Cross-Asset Pairings

Storyblocks’ Machine-Learning Recommendation System

Storyblocks, a subscription-based collection of exclusive, royalty-free content, needed to streamline content discovery for small business and enterprise video creators.

The new machine-learning-driven recommendation platform improved content discovery while reducing search time. I led the end-to-end redesign of the new Content Merchandising workflow, overseeing the necessary overhaul of key interface elements, and co-led product strategy, cross-functional collaboration, and feature definition with the Group PM.

The business problem

Storyblocks had a high volume of video downloads but a noticeable delta in audio downloads. We also started to hear more from both SMB and ENT customers that they felt their content discovery journey took up a significant portion of their project timelines.

Lastly, users expressed interest in understanding how results were being surfaced by our current algorithm. With the rise of LLMs and AI, our customers naturally wanted to better understand how their site interactions impacted their content creation. Over 60% of users interviewed wanted some assurance from Storyblocks that any content they surfaced for their video production was unique.

Even with the current, non-machine-learning-based search algorithm, there was an inherent lack of trust; users were growing concerned that they were only being given top-download results already in use by other content creators.

Content product leadership – myself, our Group PM, and our head user researcher and data analyst – set out to increase audio downloads by a minimum of 25% and significantly improve user discovery time for all media types, all while building user trust through transparency.

Understanding the problem space

I teamed up with our data analytics team to pore over site analytics reports and conducted a series of user interviews with our user research team. We found that the traditional way of organizing stock media, with completely separate buckets per media type, was the crux of both problems. Videos took users directly to a video media bucket, music to another, and images to yet another.

This old way of doing things deeply shaped Storyblocks’ entire user experience and backend infrastructure. Our data and customer interviews showed that we were unintentionally forcing users’ content discovery into a very linear, time-consuming hunt for the perfect video first, then an equally taxing search for audio.

Over 70% of our users would start their content discovery with video, only moving on to search for audio once they felt they had the right video content. Over one-third of those users would have to revisit their original video searches once they had found the perfect audio.

The impact was hours sunk into content discovery, much of that time wasted on the linear back-and-forth between video and audio. There was also very real user fatigue, and because users naturally put video first, audio downloads were taking a big hit.

The solution

Together with the cross-functional Content product leadership team, I identified our plan:

Use machine learning to automate the laborious aspects of content discovery.

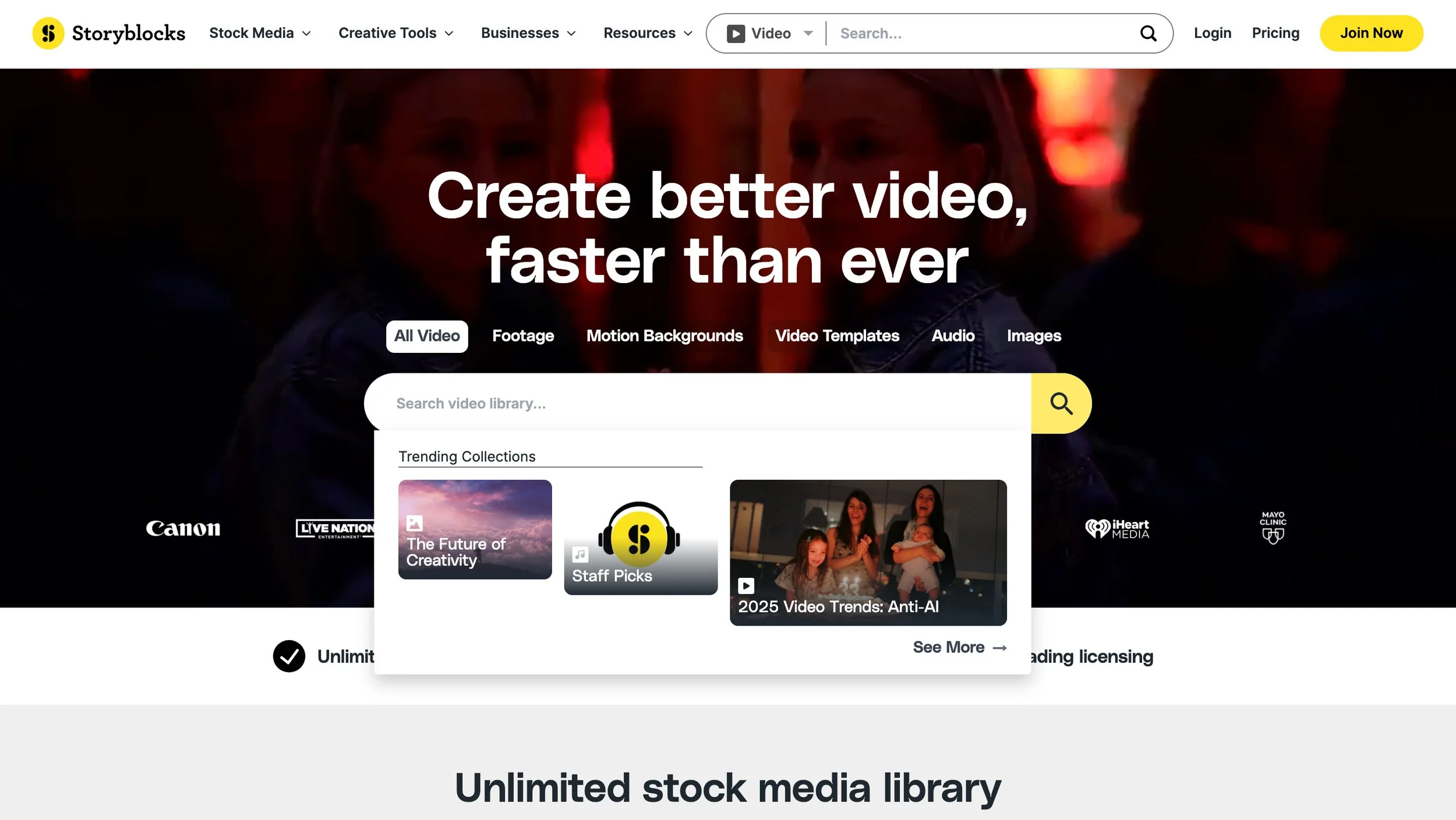

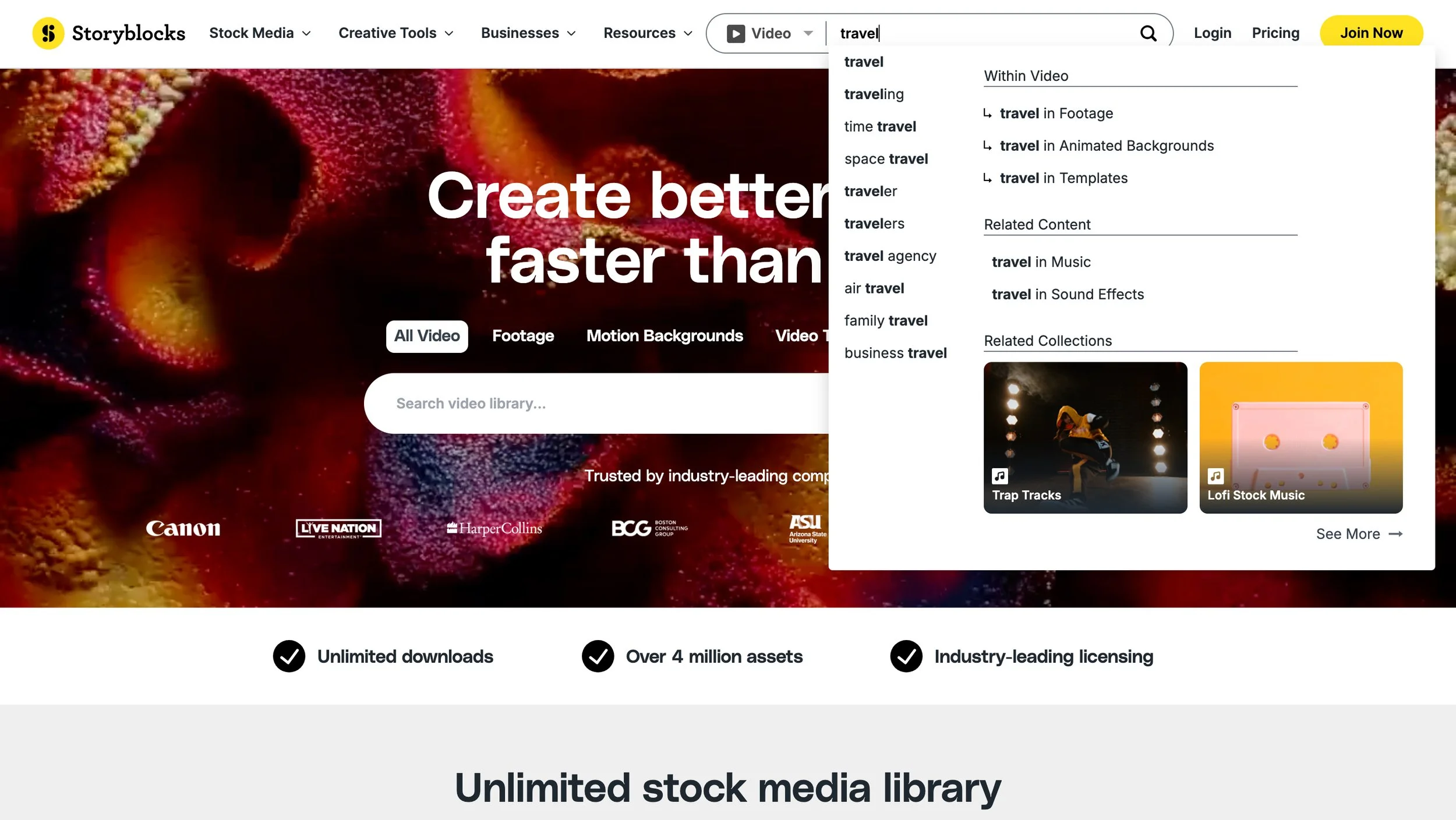

Break the illusion that the content discovery experience is linear. Update everything about the Storyblocks site experience to reflect this by overhauling the discovery workflow, global navigation, and search bar functionality.

Maintain user trust and confidence. The user experience needed to be completely transparent about when machine learning was being used in the discovery process, and it needed to elicit clear feedback to help the system learn.

New User Experiences

User Engagement

I partnered with our head user researcher to write, run, and assess a series of customer interviews and usability, preference, and copy tests to inform the broader Product Requirements.

Copy tests and prototype testing resulted in:

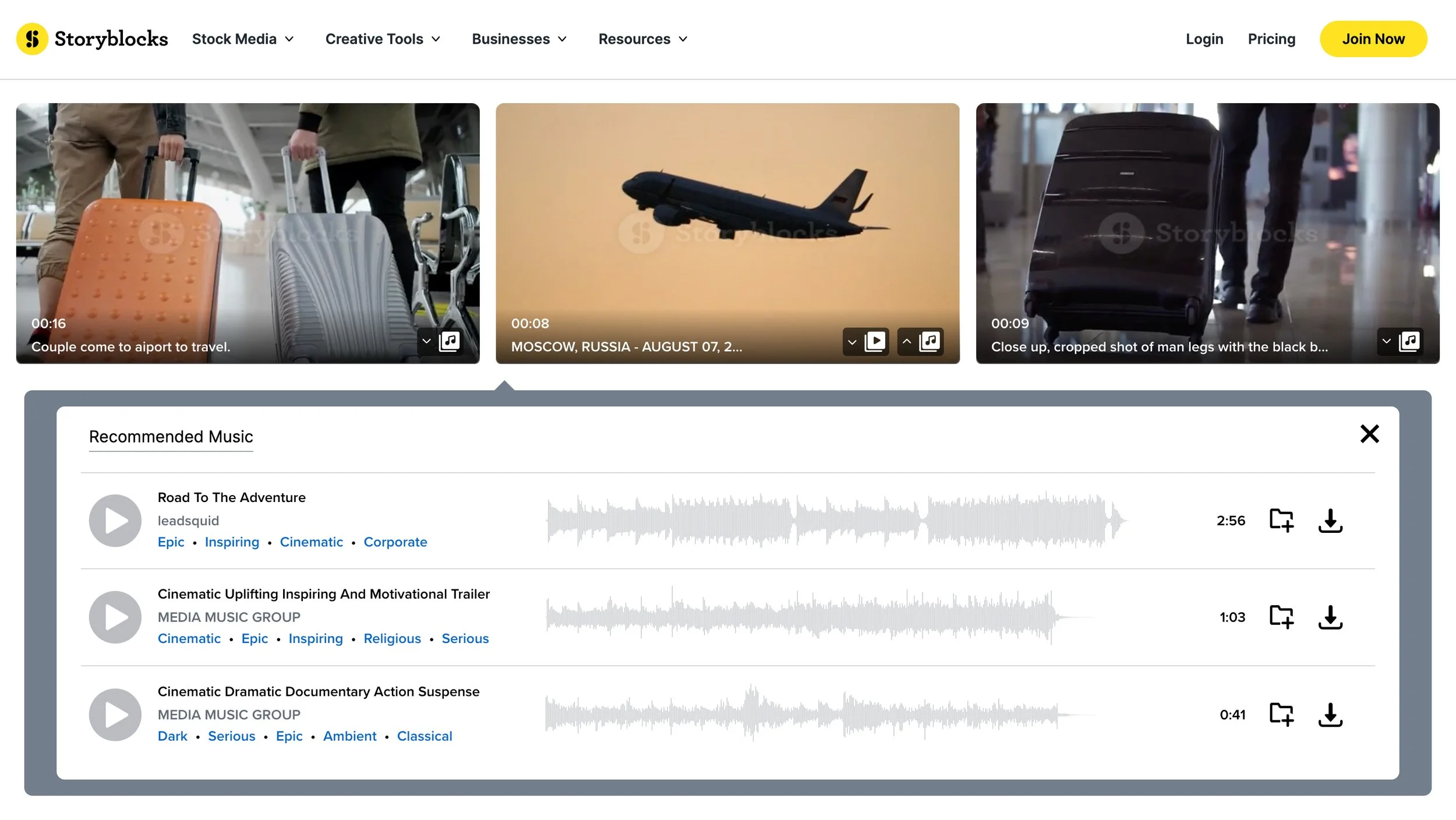

“Recommended music” performed better than “Suggested music”

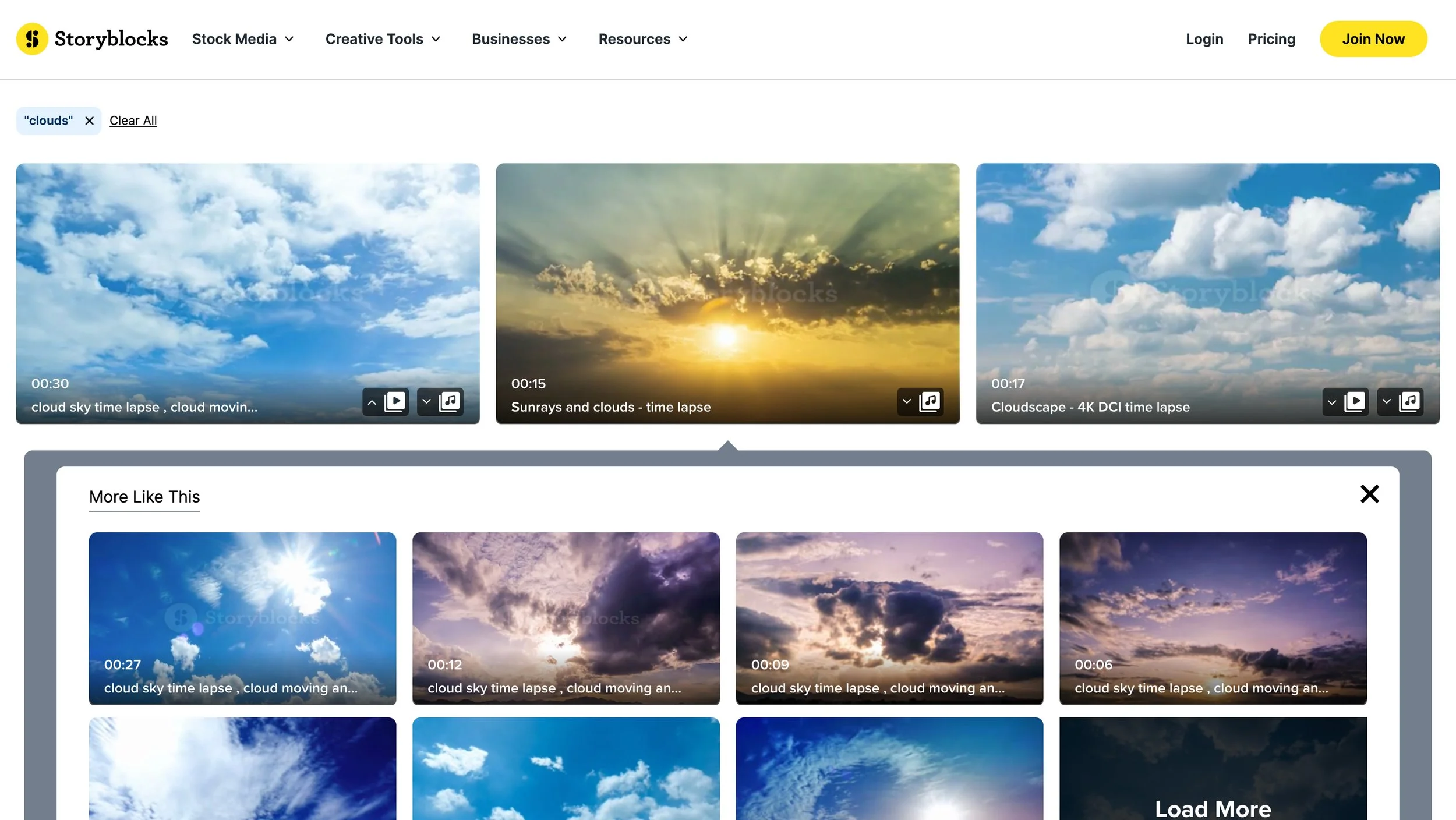

“More like this” performed better than “Search similar”

Video results thumbnails should let the content shine. Users did not want to see any expanding actions until on-hover.

Adding complementary text for all on-hover thumbnail actions improved user engagement by 35%.

Vibrant, persistent active states for opened “Recommended music” and “More like this” drawers

Customer interviews helped us identify:

Most users found the existing Search Bar experience to be overly simple, unhelpful, and frustrating

While the majority preferred to begin content discovery with video searches over audio, all participants thought about both media types at every stage of their journey

Users expected recommended media collections (curated by Storyblocks) to be surfaced at this level

Since the individual buckets of video, audio, and image content needed to be combined, I ran a series of copy tests to inform the best way to refer to combined media:

“Stock media” dramatically outperformed the other options put forward by our GPM, “Content” and “Stock content”

The results instilled confidence (including in the C-suite) that the new way of navigating all media would be easily understood

The Cross-Asset Pairing system, with the power of the new recommendation drawers, led to a cumulative 30% increase in both video and audio downloads. Users also reported significantly faster, more streamlined content discovery, which let them jump into content production sooner.

The updated global navigation, including the bundling of all content types under “Stock Media,” intuitively supported the Cross-Asset Pairing system and contributed to the project’s overall success. It is still in use to this day.

The new and improved Search Bar, including our featured collections in the null state, resulted in a 60% increase in Collections traffic, a 30% increase in audio traffic, and a 10% increase in sound effects traffic.

These new experiences are still improving content creators’ lives today, almost three years after their inception.